This is a guide for installing and configuring Apache Spark and its Python API, PySpark, on a single machine running Ubuntu 15.04.

-- Kristian Holsheimer, July 2015

Table of Contents

1.1 Install Java

1.2 Install Scala

1.3 Install git

1.4 Install py4j

2.1 Download source

2.2 Compile source

2.3 Install files

3.1 Hello World: Word Count

In order to run Spark, we need Scala, which in turn requires Java. So let's install these requirements first.

1 | Install Requirements

1.1 | Install Java

Check whether the installation was successful by running:

The output should be something like:
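If you'd like to script this check (for example, to verify several machines), here is a minimal Python 3.7+ sketch. It assumes `java` is on your PATH. Note that `java -version` writes its banner to stderr, not stdout. The helper names are hypothetical.

```python
import re
import subprocess


def parse_java_version(banner):
    """Extract the quoted version string from `java -version` output, or None."""
    match = re.search(r'version "([^"]+)"', banner)
    return match.group(1) if match else None


def installed_java_version():
    """Run `java -version` and return its version string, or None if java is unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, timeout=60
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return None
    # java prints its version banner on stderr, not stdout
    return parse_java_version(result.stderr)


if __name__ == "__main__":
    print(installed_java_version())
```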

1.2 | Install Scala

Download and install the .deb package from scala-lang.org:

Note: You may want to check whether there's a more recent version. At the time of writing, 2.11.7 was the most recent stable release; visit the Scala download page to check for updates.

Again, let's check whether the installation was successful by running:

which should return something like:
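The note above names 2.11.7 as the reference release, so a small Python 3.7+ sketch can compare the installed version against it. The helper names are hypothetical. The parser assumes the banner contains `version X.Y.Z`, as the Scala 2.x runner's banner does.

```python
import re
import subprocess

# Release named in this guide as the latest stable version at the time of writing.
RECOMMENDED = (2, 11, 7)


def parse_scala_version(banner):
    """Return the version found in `scala -version` output as an int tuple, or None."""
    match = re.search(r"version (\d+)\.(\d+)\.(\d+)", banner)
    return tuple(int(part) for part in match.groups()) if match else None


def scala_is_recent_enough(minimum=RECOMMENDED):
    """True if the installed scala is at least `minimum`; False if older or missing."""
    try:
        result = subprocess.run(
            ["scala", "-version"], capture_output=True, text=True, timeout=60
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return False
    # Scala 2.x prints the banner on stderr, so search both streams to be safe.
    version = parse_scala_version(result.stdout + result.stderr)
    # Tuples compare element by element, so (2, 12, 0) >= (2, 11, 7).
    return version is not None and version >= minimum
```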

1.3 | Install git

We shall install Apache Spark by building it from source. The build procedure implicitly depends on git, so be sure to install git if you haven't already:
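Before starting the build, you can confirm that all three prerequisites from this section (Java, Scala and git) are reachable. Here is a minimal Python sketch using the standard library's `shutil.which`. The helper name `missing_tools` is hypothetical.

```python
import shutil

# Command-line tools this guide installs in section 1.
REQUIRED_TOOLS = ("java", "scala", "git")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the names of tools that cannot be found on the current PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("All prerequisites found.")
```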

1.4 | Install py4j

PySpark requires the py4j Python package. If you're working inside a virtual environment, run:

otherwise, run:
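A virtual environment has its own site-packages, so it's worth confirming that py4j is visible to the interpreter you will actually use. Here is a small Python check. The helper name is hypothetical.

```python
import importlib.util


def is_importable(module_name):
    """Return True if `module_name` can be imported by the current interpreter."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # Run this with the same interpreter (or virtualenv) you will use for PySpark.
    print("py4j available:", is_importable("py4j"))
```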

2 | Install Apache Spark

2.1 | Download and extract source tarball

Note: Here, too, you may want to check whether there's a more recent version; visit the Spark download page.
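If you prefer to fetch and unpack the tarball from Python, here is a minimal sketch using only the standard library. It deliberately takes the URL as a parameter, so pass the link you copied from the Spark download page. Both function names are hypothetical. The extractor refuses archive members whose names would land outside the destination folder.

```python
import os
import tarfile
import urllib.request


def extract_tarball(archive_path, dest_dir):
    """Extract a .tgz archive into dest_dir, refusing member names that escape it."""
    dest_dir = os.path.realpath(dest_dir)
    with tarfile.open(archive_path, "r:gz") as tar:
        for member in tar.getmembers():
            target = os.path.realpath(os.path.join(dest_dir, member.name))
            # Guard against path traversal ("../") in archive member names.
            if os.path.commonpath([dest_dir, target]) != dest_dir:
                raise ValueError("unsafe path in archive: " + member.name)
        tar.extractall(dest_dir)
    return dest_dir


def download_and_extract(url, dest_dir):
    """Download the tarball at `url` into dest_dir and unpack it there."""
    os.makedirs(dest_dir, exist_ok=True)
    archive_path = os.path.join(dest_dir, os.path.basename(url))
    urllib.request.urlretrieve(url, archive_path)
    return extract_tarball(archive_path, dest_dir)
```

For example, `download_and_extract(url, os.path.expanduser("~/Downloads"))` unpacks the source next to the archive. That matches the ~/Downloads/spark-1.4.0 folder used later in this guide, assuming the tarball's top-level directory has that name.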

2.2 | Compile source

This will take a while (roughly 20 to 30 minutes).

After the dust settles, you can check whether Spark was installed correctly by running the following example, which computes an approximation of π ≈ 3.14159:

This should return the line:
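Assuming the example in question is Spark's bundled SparkPi, it estimates π by Monte Carlo sampling. It throws random points at the square [-1, 1]², and the fraction that lands inside the unit circle approaches π/4. The sketch below shows that idea in plain Python. It is not the source of Spark's example, runs without Spark, and uses hypothetical function names.

```python
import random


def inside_unit_circle(_):
    """Sample a point in the square [-1, 1]^2 and report whether it lies in the unit circle."""
    x, y = random.uniform(-1, 1), random.uniform(-1, 1)
    return x * x + y * y <= 1


def estimate_pi(num_samples, seed=None):
    """Monte Carlo estimate: the circle covers pi/4 of the square's area (num_samples > 0)."""
    if seed is not None:
        random.seed(seed)
    hits = sum(1 for i in range(num_samples) if inside_unit_circle(i))
    return 4.0 * hits / num_samples


if __name__ == "__main__":
    print("Pi is roughly", estimate_pi(100000, seed=42))
```

With a running SparkContext `sc`, the same predicate distributes directly: `4.0 * sc.parallelize(range(n)).filter(inside_unit_circle).count() / n`.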

Note: You may want to lower the verbosity level of the log4j logger. You can do so by editing the log4j properties file (assuming we're still inside the ~/Downloads/spark-1.4.0 folder):

and replace the line:

with:
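In Spark 1.x, conf/log4j.properties is normally created as a copy of conf/log4j.properties.template, whose root logger line reads `log4j.rootCategory=INFO, console`. Switching INFO to WARN silences most of the startup chatter. The Python sketch below automates that edit. It assumes your template uses that exact line with an appender named `console`, and the function name is hypothetical.

```python
import os
import shutil


def quiet_spark_logs(spark_dir, level="WARN"):
    """Create conf/log4j.properties from the template with the root logger set to `level`."""
    conf_dir = os.path.join(spark_dir, "conf")
    template = os.path.join(conf_dir, "log4j.properties.template")
    target = os.path.join(conf_dir, "log4j.properties")
    if not os.path.exists(target):
        shutil.copyfile(template, target)
    with open(target) as fh:
        lines = fh.readlines()
    with open(target, "w") as fh:
        for line in lines:
            # Only the root logger line changes (assumes the appender is named "console").
            if line.startswith("log4j.rootCategory="):
                line = "log4j.rootCategory={}, console\n".format(level)
            fh.write(line)
    return target
```

Usage: `quiet_spark_logs(os.path.expanduser("~/Downloads/spark-1.4.0"))`.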

2.3 | Install files

Add Spark to your path by editing your ~/.bashrc file:

Add the following lines at the bottom of this file:

Restart bash to make use of these changes by running:
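If you script this step, make the append idempotent so that running it twice doesn't duplicate the exports. The Python sketch below does that. The three export lines are hypothetical placeholders (a SPARK_HOME of /opt/spark plus PATH and PYTHONPATH entries), not necessarily the exact lines this guide uses, so adjust them to your install location.

```python
import os

# Hypothetical values: adjust SPARK_HOME to wherever you put the Spark files.
SPARK_LINES = [
    "export SPARK_HOME=/opt/spark",
    "export PATH=$SPARK_HOME/bin:$PATH",
    "export PYTHONPATH=$SPARK_HOME/python:$PYTHONPATH",
]


def append_missing_lines(rc_path, lines=SPARK_LINES):
    """Append each line to rc_path unless an identical line is already present."""
    existing = set()
    if os.path.exists(rc_path):
        with open(rc_path) as fh:
            existing = {line.rstrip("\n") for line in fh}
    added = [line for line in lines if line not in existing]
    if added:
        with open(rc_path, "a") as fh:
            fh.write("\n" + "\n".join(added) + "\n")
    return added
```

Usage: `append_missing_lines(os.path.expanduser("~/.bashrc"))`, then reload bash as described above.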

If your IPython instance doesn't pick up these environment variables for some reason, you can also make sure they are set when IPython starts. To do so, create a new Python script named load_spark_environment_variables.py in the default profile's startup folder:

and paste the following lines in this file:
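IPython runs every .py file in ~/.ipython/profile_default/startup/ at launch. Exactly what goes into this file depends on where you installed Spark. The sketch below assumes SPARK_HOME is either already set or should default to a hypothetical /opt/spark. It puts Spark's python directory and the bundled py4j source zip (if present) on sys.path. The function name is hypothetical, and the settings only affect the IPython process that runs the script.

```python
import glob
import os
import sys


def spark_python_paths(spark_home):
    """Return the directories/zips under spark_home that PySpark needs on sys.path."""
    python_dir = os.path.join(spark_home, "python")
    # Spark bundles py4j as python/lib/py4j-<version>-src.zip; the version varies by release.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


# Hypothetical default location; an existing SPARK_HOME takes precedence.
spark_home = os.environ.setdefault("SPARK_HOME", "/opt/spark")
for path in spark_python_paths(spark_home):
    if path not in sys.path:
        sys.path.insert(0, path)
```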

3 | Examples

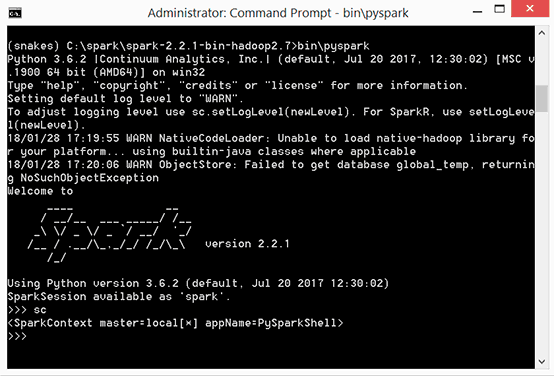

Now we're finally ready to run our first PySpark application. Load the Spark context by opening a Python interpreter (or IPython / IPython Notebook) and running:

The Spark context variable sc is your gateway to everything sparkly.
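For a standalone script, here is a minimal sketch of creating the context yourself. `pyspark.SparkContext` accepts `master` and `appName` arguments. The function name, the app name and the `local[*]` master (run locally on all available cores) are assumptions for illustration.

```python
def make_spark_context(app_name="first-pyspark-app", master="local[*]"):
    """Create a SparkContext that runs locally using all available cores."""
    # Imported lazily so this module can be loaded even where PySpark is not installed.
    from pyspark import SparkContext

    return SparkContext(master=master, appName=app_name)
```

Inside the interactive `pyspark` shell, `sc` already exists, so only call this from a plain interpreter. Only one SparkContext can be active per process, so call `sc.stop()` before creating another.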

3.1 | Hello World: Word Count

Check out the notebook spark_word_count.ipynb.
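Here is a hedged sketch of the classic Spark word count, not necessarily what spark_word_count.ipynb does. It is the flatMap / map / reduceByKey pipeline, shown next to a pure-Python reference that computes the same counts. The tokenizer and function names are assumptions.

```python
import re
from collections import Counter
from operator import add


def tokenize(line):
    """Lower-case a line and split it into words made of letters, digits and apostrophes."""
    return re.findall(r"[a-z0-9']+", line.lower())


def word_count_local(lines):
    """Pure-Python reference: count words across an iterable of lines."""
    return Counter(word for line in lines for word in tokenize(line))


def word_count_spark(sc, path):
    """The same computation on Spark: emit (word, 1) per word, then sum per key."""
    return (
        sc.textFile(path)
        .flatMap(tokenize)
        .map(lambda word: (word, 1))
        .reduceByKey(add)
    )
```

With a live context, `word_count_spark(sc, "README.md").takeOrdered(10, key=lambda kv: -kv[1])` returns the ten most frequent words in any text file you point it at.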